本文使用开源软件Stable Diffusion UI+ chilloutmix-ni + Lora进行AIGC环境搭建,并且用Stable Diffusion模型为基础训练自定义Lora,先看一些效果,不同年龄段人物、莫奈风格风景都能生成…

准备环境

1、硬件:CPU: 8vcpu,内存: 32 GiB, GPU: 1*NVIDIA V100

2、Ubuntu环境:Ubuntu 22.04.3 LTS3、基础软件:apt install wget git python3 python3-venv libgl1 libglib2.0-0,以及安装anaconda下载Stable Diffusion UI

#登录进ubuntu控制台root@ubuntu:/mnt/workspace#mkdir sd && cd sd && git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.gitroot@ubuntu:/mnt/workspace/sd/stable-diffusion-webui#conda create -n myenv python=3.10.12root@ubuntu:/mnt/workspace/sd/stable-diffusion-webui#conda activate myenv#webui.sh 不能用root账户运行,加-f(myenv) root@ubuntu:/mnt/workspace/sd/stable-diffusion-webui# bash webui.sh -f#说明:webui.sh->launch.py->launch_utils.py, 启动过程中会从github下载pytorch, openclip, stable diffusion以及其它依赖package,中间因为github连接问题,有可能会有error,继续运行上述命令即可。launch_utils.py里面的相关下载依赖·:

下载之后的模型和配置文件,保存在repositories目录

当出现如下字样,表示Stable Diffusion基础环境已经搭建好

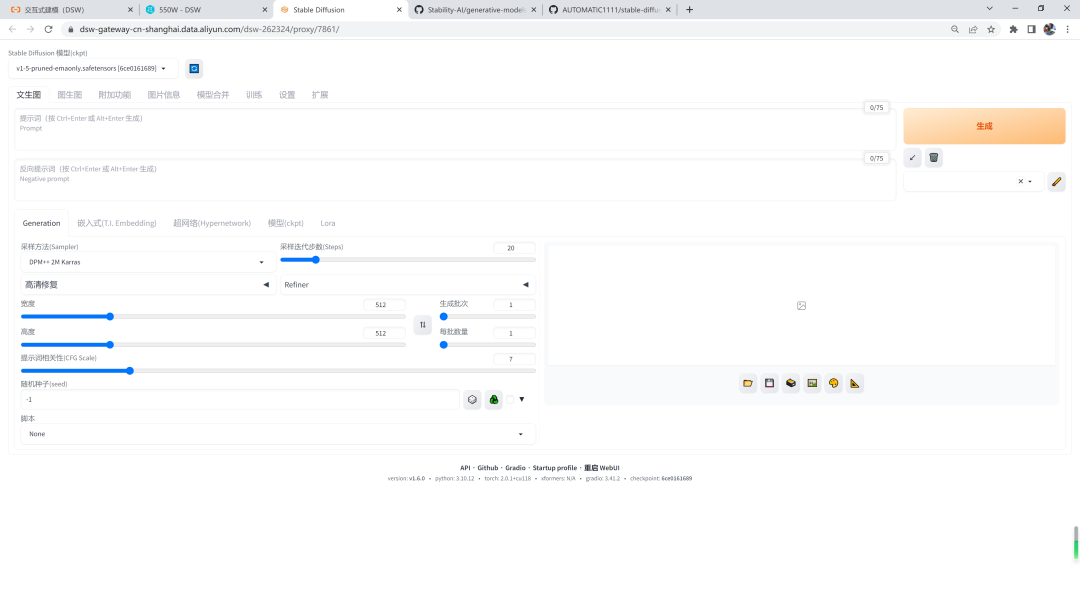

运行Stable Diffusion UI

环境环境启动时候默认下载的是v1-5-pruned-emaonly.safetensors,是从huggingface.co下载,默认在sd_model.py中配置:

model_url = “https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.safetensors”终于来了,输入魔法(prompt): a man, handsome,点击Generate就可以根据prompt生成下图:

汉化Stable Diffusion UI(可选)

根据个人选择是否要汉化,在交友网站github上找到stable-diffusion-webui-localization-zh_CN,使用步骤如下:

#切换到extensions目录(myenv) root@ubuntu:/mnt/workspace/sd/stable-diffusion-webui/extensions# git clone https://github.com/dtlnor/stable-diffusion-webui-localization-zh_CNCloning into stable-diffusion-webui-localization-zh_CN…remote: Enumerating objects: 958, done.remote: Counting objects: 100% (461/461), done.remote: Compressing objects: 100% (131/131), done.remote: Total 958 (delta 325), reused 400 (delta 281), pack-reused 497Receiving objects: 100% (958/958), 833.18 KiB | 1.55 MiB/s, done.Resolving deltas: 100% (517/517), done.回到页面,转到“Settings”标签,Reload UI, 在“User Interface”就可以发现zh_CN, 选中,Apply settings,再次Reload UI,页面就是简中的

简体中文:

chilloutmix-ni模型

使用了一下SD 1.5对亚洲面孔生成的效果不怎么好,国内大部分都用chilloutmix-ni模型,到huggingface去下载,地址:https://huggingface.co/swl-models/chilloutmix-ni

#切换到models目录(myenv) root@ubuntu:/mnt/workspace/sd/stable-diffusion-webui#cd models因为模型文件好几个g比较大,需要安装lfs(myenv) root@ubuntu:/mnt/workspace/sd/stable-diffusion-webui/models/Stable-diffusion# git lfs installUpdated git hooks.Git LFS initialized.#如下命令只clone小文件(myenv) root@ubuntu:/mnt/workspace/sd/stable-diffusion-webui/models# GIT_LFS_SKIP_SMUDGE=1 git clone https://huggingface.co/swl-models/chilloutmix-ni(myenv) root@ubuntu:/mnt/workspace/sd/stable-diffusion-webui/models#cd chilloutmix-ni#在只下载某一个大文件(myenv) root@ubuntu:/mnt/workspace/sd/stable-diffusion-webui/models/chilloutmix-ni#git lfs pull –include=”chilloutmix-Ni.safetensors”#制定通配符下载文件git lfs pull –include=”*.safetensors”到页面用新模型

chilloutmix-Ni模型是针对亚洲人做的优化,国内大部分人在使用,以及各种MCN也是用这个模型,接下来介绍Lora

Lora

Lora在基础模型上的微调、ESRGAN (高清修复算法)、VAE也是微调,下载后放到指定目录,然后再Setting页面选择设置。

Lora可以在 https://tusi.art/ 找到很多类似的想要的模型下载,也可以在hugging face clone下载,下载放到models/Lora 目录即可,在页面刷新即可使用(吐司或者huggingface有可能有限制,可以自行找其它site代替)

下载的模型也可以预设权重去使用,example:

模型训练

基础准备

#有时候github访问不稳定,可通过https://ghproxy.com代理访问,用法https://ghproxy.com/https://github.com/xxx(注意不要带后缀.git)

#新建training目录和clone kohya_ss

root@ubuntu:/mnt/workspace/aigc#mkdir training && cd training && git clone https://ghproxy.com/https://github.com/bmaltais/kohya_ss

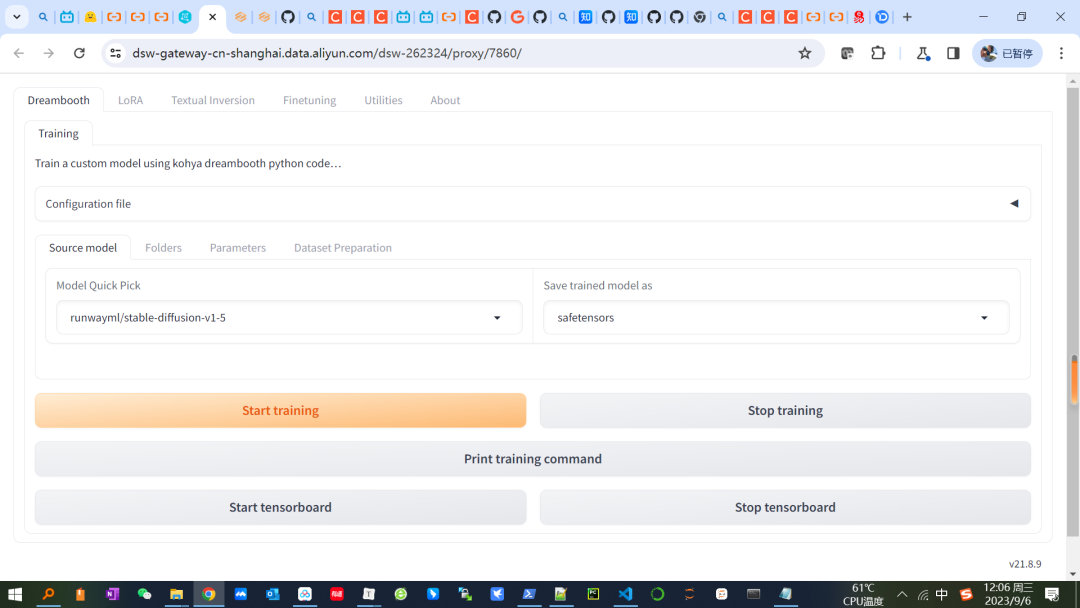

#新建和激活新虚拟环境root@ubuntu:/mnt/workspace/aigc/training/kohya_ss#python3 -m venv tutorial-env1root@ubuntu:/mnt/workspace/aigc/training/kohya_ss# source tutorial-env1/bin/activate安装依赖#先运行依赖:(tutorial-env1) root@ubuntu:/mnt/workspace/aigc/training/kohya_ss# pip install -r requirments.txt#再运行setup.sh提示安装python3-tk(注意如果没有运行pip install -r requirments.txt的情况下,可能会提示安装python3-tk)(tutorial-env1) root@ubuntu:/mnt/workspace/aigc/training/kohya_ss# ./setup.sh Skipping git operations.Ubuntu detected.This script needs YOU to install the missing python3-tk packages. Please install with:sudo apt update -y && sudo apt install -y python3-tk(tutorial-env1) root@ubuntu:/mnt/workspace/aigc/training/kohya_ss# sudo apt update -y && sudo apt install -y python3-tk备注:中间会有提示是哪个区域,比如亚洲,以及市区等选择,选择后继续,就可以成功安装运行.setup.sh(tutorial-env1) root@ubuntu:/mnt/workspace/aigc/training/kohya_ss# ./setup.sh Skipping git operations.Ubuntu detected.Python TK found…Switching to virtual Python environment.11:47:23-690356 INFO Installing python dependencies. This could take a few minutes as it downloads files. 11:47:23-694572 INFO If this operation ever runs too long, you can rerun this script in verbose mode to check. 11:47:23-695810 INFO Version: v21.8.9 11:47:23-696959 INFO Python 3.10.6 on Linux 11:47:23-698672 INFO Installing modules from requirements_linux.txt… 11:47:23-699868 INFO Installing package: torch==2.0.1+cu118 torchvision==0.15.2+cu118 –extra-index-url https://download.pytorch.org/whl/cu118 启动用这个命令启动./gui.sh –listen 127.0.0.1 –server_port 7861 –inbrowser –share(tutorial-env1) root@ubuntu:/mnt/workspace/aigc/training/kohya_ss# ./gui.sh –listen 127.0.0.1 –server_port 7860 –inbrowser –sharevenv folder does not exist. Not activating…11:56:31-270322 INFO Version: v21.8.9 11:56:31-275309 INFO nVidia toolkit detected 11:56:32-664282 INFO Torch 2.0.1+cu118 11:56:32-696248 INFO Torch backend: nVidia CUDA 11.8 cuDNN 8700 11:56:32-706578 INFO Torch detected GPU: Tesla V100-SXM2-16GB VRAM 16160 Arch (7, 0) Cores 80 11:56:32-707970 INFO Verifying modules instalation status from /mnt/workspace/aigc/training/kohya_ss/requirements_linux.txt… 11:56:32-711037 INFO Verifying modules instalation status from requirements.txt… no language11:56:35-271776 INFO headless: False 11:56:35-276048 INFO Load CSS… Running on local URL: http://127.0.0.1:7860Running on public URL: https://ce46f27d36a34e1201.gradio.liveThis share link expires in 72 hours. For free permanent hosting and GPU upgrades, run `gradio deploy` from Terminal to deploy to Spaces (https://huggingface.co/spaces)注意这个链接可以在其它地方访问:Running on public URL: https://ce46f27d36a34e1201.gradio.live启动后的页面:

图像预处理

用stable-diffusion-webui进行图像预处理注意:这个目录不能有下划线,否则会提示错误,把准备好的图像数据集放到/mnt/workspace/aigc/training/trainingset,勾选自动焦点裁切和使用Blip选型

训练配置和准备

建三个目录1、模型的训练图片 注意:这个目录有一个格式要求,比如在这个目录/mnt/workspace/aigc/training/trainingsetoutput,下面新建目录17,17下面是17个训练图片2、模型输出目录 mnt/workspace/aigc/training/models3、日志目录mnt/workspace/aigc/training/log详见下面的lora.json文件编辑一个lora.json

放到一个目录,比如/mnt/workspace/aigc/training/lora.json

{“pretrained_model_name_or_path”: “runwayml/stable-diffusion-v1-5”,“v2”: false,“v_parameterization”: false,“logging_dir”: “mnt/workspace/aigc/training/log”,“train_data_dir”: “/mnt/workspace/aigc/training/trainingsetoutput”,“reg_data_dir”: “”,“output_dir”: “mnt/workspace/aigc/training/models”,“max_resolution”: “512,512”,“learning_rate”: “0.0001”,“lr_scheduler”: “constant”,“lr_warmup”: “0”,“train_batch_size”: 2,“epoch”: “1”,“save_every_n_epochs”: “1”,“mixed_precision”: “bf16”,“save_precision”: “bf16”,“seed”: “1234”,“num_cpu_threads_per_process”: 2,“cache_latents”: true,“caption_extension”: “.txt”,“enable_bucket”: false,“gradient_checkpointing”: false,“full_fp16”: false,“no_token_padding”: false,“stop_text_encoder_training”: 0,“use_8bit_adam”: true,“xformers”: true,“save_model_as”: “safetensors”,“shuffle_caption”: false,“save_state”: false,“resume”: “”,“prior_loss_weight”: 1.0,“text_encoder_lr”: “5e-5”,“unet_lr”: “0.0001”,“network_dim”: 128,“lora_network_weights”: “”,“color_aug”: false,“flip_aug”: false,“clip_skip”: 2,“gradient_accumulation_steps”: 1.0,“mem_eff_attn”: false,“output_name”: “Addams”,“model_list”: “runwayml/stable-diffusion-v1-5”,“max_token_length”: “75”,“max_train_epochs”: “”,“max_data_loader_n_workers”: “1”,“network_alpha”: 128,“training_comment”: “”,“keep_tokens”: “0”,“lr_scheduler_num_cycles”: “”,“lr_scheduler_power”: “”}加载配置

从界面选择/mnt/workspace/aigc/training/lora.json,就可以加载文件

注意如果是用上述的lora.json文件,如果是自定义的模型,需要在重新修改为:

模型训练

模型输出在指定的目录/mnt/systemDisk/aigc/training/models/mark.safetensors,复制到目录/mnt/workspace/aigc/stable-diffusion-webui/models/Stable-diffusion,刷新stable-diffusion-webui,就可以加载模型新模型使用

至此就搭建一个本地可运行的AIGC,使用Stable Diffusion + chilloutmix-ni + Lora,搭建一个可以玩耍的文生图工具。通过bmaltais/kohya_ss来搭建一个模型训练环境。用不同的prompt生成一些图,图形的效果很大程度上依赖于如何写prompt,文首的效果prompt:

prompt:best quality, masterpiece, photorealistic:1, 1girl, dramatic lighting, from below,((((gorgeous)))) royal dress, ((((gorgeous)))) gold tiara, ((((gorgeous)))) gold necklace, ((((gorgeous)))) gold accessories, light on body,Extraordinary details,A girl, ((The girl wears a robe)),(He was covered in chain mail:1.4),(Colorful clothing:1.5),(Cloth trousers:1.2),Perfect facial features,delicated face,long whitr hair, As graceful as a swan, wisdom, mod, Rainbow ,scutes, banya, fire, In the background is an ancient forest, Mysterious connection, Aegis, Trust,Realistic image quality,photorealestic,8K,best qualtiy,tmasterpiece,movie-level quality,High chiaroscuro,rendering by octane,jellyfishforest,Confident pretty girl in long white dress with low color and flowing hairnegtive prompt: nsfw, (ng_deepnegative_v1_75t:1.2),(badhandv4:1),(worst quality:2),(low quality:2),(normal quality:2),lowres,bad anatomy,bad hands,watermark,moles,toe,bad-picture-chill-75v,realisticvision-negative-embeddingprompt: A 60-year-old man, There are scars on the face, Wearing a spacesuit, Gaze at the audience, The background is a flying machine explosion, Star Wars scenes, The dark universe, lonely, Epic, Warfarenegtive prompt: lowres,bad anatomy,bad hands,text,error,missing fingers,extra digit,fewer digits,cropped,worst quality,low quality,normal quality,jpeg artifacts,signature,watermark,username,blurry,(chiaroscuro:1.3),(makeup:1.2),(hair shadow:1.1),(hands:1.6)